Once the data has been extracted, you can export it automatically to Excel, or use the Octoparse API to send it directly to your own systems.

Octoparse's cloud service can run 24 hours a day, and it regularly rotates IP addresses to reduce the risk of being detected or blocked by site administrators. Because scraping is funneled through the Octoparse cloud platform, extraction runs at 6 to 20 times the speed of conventional web scraping software running locally. Scraping isn't limited to raw text, either: the program can also pull links, images, and HTML code. Whether the site you're looking at is built on JavaScript, AJAX, or other dynamic web technologies, Octoparse has the tools to pull the information you need, so there's no need to be intimidated by complicated websites.

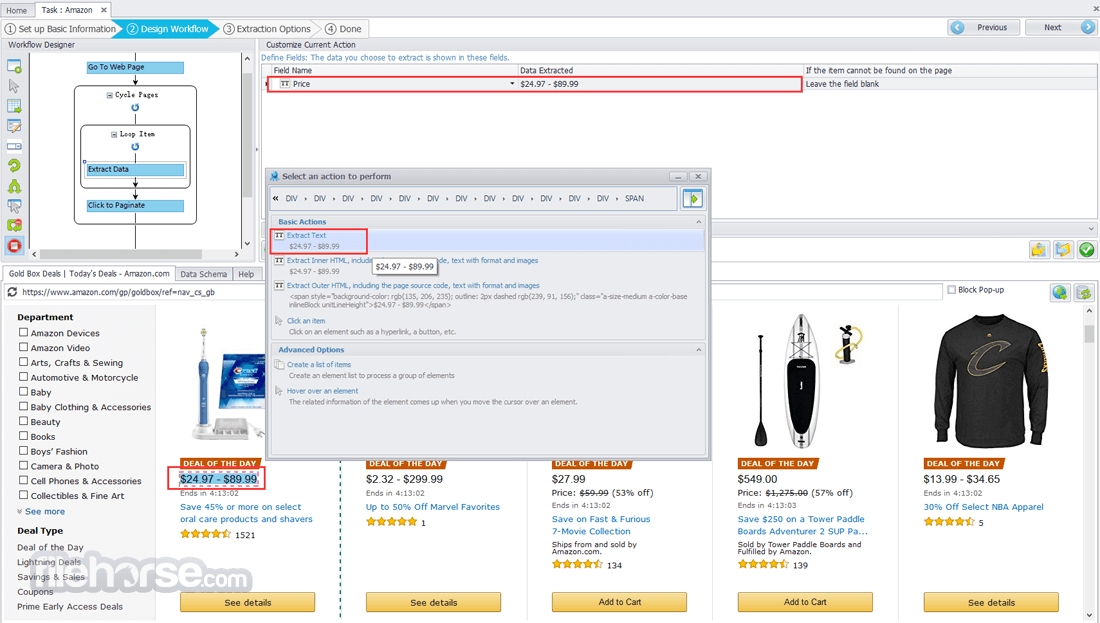

Traditional data scraping relies on building web crawlers that automatically patrol sites, look for information relevant to the user's needs, and compile it on a schedule. Pulling information properly this way once required a deep understanding of a coding language like Python, but Octoparse puts these tools in everyone's hands. It lets users create specialized crawlers through a browser that resembles the ones used for everyday web surfing: you navigate to the site you want to scrape and set your criteria through its point-and-click interface. Octoparse then compiles the data into neatly aligned spreadsheets set to your parameters, so you can access them yourself or transfer them to more dynamic data analysis software.
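For a sense of what the hand-coded approach involved, here is a minimal Python sketch of one small piece of it: pulling links and images out of a page and compiling them into spreadsheet-ready CSV. It assumes the page HTML has already been downloaded, uses only the standard library, and the names `LinkImageParser`, `extract`, and `to_csv` are hypothetical. It is not how Octoparse works internally, and because it doesn't execute JavaScript, it would miss content that AJAX-heavy sites load after the page renders.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkImageParser(HTMLParser):
    """Hypothetical helper: collects (kind, url) pairs for <a href> and <img src> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.rows: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a" and attributes.get("href"):
            # Resolve relative links against the page URL.
            self.rows.append(("link", urljoin(self.base_url, attributes["href"])))
        elif tag == "img" and attributes.get("src"):
            self.rows.append(("image", urljoin(self.base_url, attributes["src"])))


def extract(html: str, base_url: str) -> list[tuple[str, str]]:
    """Parse static HTML and return links and images in document order."""
    parser = LinkImageParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.rows


def to_csv(rows: list[tuple[str, str]]) -> str:
    """Compile the extracted rows into CSV text that Excel can open."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["kind", "url"])
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    # A made-up sample page standing in for a downloaded site.
    sample_page = """
    <html><body>
      <a href="/products">Products</a>
      <img src="logo.png" alt="Logo">
      <a href="https://example.com/contact">Contact</a>
    </body></html>
    """
    print(to_csv(extract(sample_page, "https://example.com/")))
```

A real crawler would also need page fetching, scheduling, pagination, and error handling on top of this, which is the work a point-and-click tool aims to take off the user's plate.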


Overall Opinion: Whether you're a sales professional in search of new leads or an academic researcher in need of external data, web scraping can be a valuable tool to help you get the information you need.
